Artificial intelligence has become more than just another tool for software development. AI-powered code assistants are transforming how developers write, review, and maintain code, helping them work faster and smarter. However, as organizations embrace AI assistants like GitHub Copilot, concerns about data privacy and intellectual property protection continue to grow.

Most cloud-based AI solutions require sending code to external servers, which poses a security risk for enterprises handling sensitive information. The solution? Running AI coding assistants locally. By keeping everything in-house, organizations can maintain full control over their data while still benefiting from AI-driven development.

In this post, we’ll explore four top local AI coding assistants, breaking down their features, limitations, and best use cases so you can find the right fit for your team.

1. Continue – The Easiest Drop-in Replacement

Continue is a leading open-source AI code assistant designed as a seamless alternative to Copilot. With support for auto-completion, chat, and action-based workflows, it’s one of the most versatile tools available. Plus, it works with both VS Code and JetBrains IDEs, making it a great fit for a wide range of developers.

One of Continue’s biggest strengths is its frictionless installation. The setup process happens directly within your IDE, walking you through each step to ensure it’s configured correctly.

Pros

- Easy installation with guided setup

- Supports both VS Code and JetBrains

- Offers auto-completion, chat, and actions

Cons

- Limited to local Ollama instances (no remote model support)

- Changing the AI model is less intuitive than in other tools

Bottom line: If you’re looking for a simple and effective local Copilot alternative with great IDE support, Continue is a solid choice.

2. Llama Coder – Greater Model Flexibility

Llama Coder is another open-source Copilot replacement with a straightforward setup. It requires Ollama and a plugin installation in VS Code. Unlike Continue, Llama Coder offers more flexibility in model selection, allowing users to connect to a remote Ollama instance.

However, Llama Coder lacks chat functionality, which might be a dealbreaker for those who rely on AI-powered conversations for debugging or brainstorming.
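Because Llama Coder depends on an Ollama instance, local or remote, it can help to confirm that the server is reachable before pointing the plugin at it. The sketch below assumes Ollama's HTTP API on its default port (11434) and its `/api/tags` endpoint, which lists installed models. The function names are hypothetical and chosen only for illustration.

```python
import json
import urllib.request


def tags_url(base_url="http://localhost:11434"):
    """Build the URL of Ollama's model-listing endpoint."""
    return base_url.rstrip("/") + "/api/tags"


def model_names(payload):
    """Extract model names from a parsed /api/tags response.

    The response is assumed to look like
    {"models": [{"name": "codellama:7b", ...}, ...]}.
    """
    return [model["name"] for model in payload.get("models", [])]


def list_ollama_models(base_url="http://localhost:11434", timeout=5.0):
    """Ask a local or remote Ollama server which models it has installed."""
    with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as response:
        return model_names(json.load(response))
```

Calling `list_ollama_models()` checks a local instance, and passing the address of your own remote server checks that one instead. A connection error usually means the server is not running or is not reachable from your machine.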

Pros

- Easily switch between AI models

- Supports remote Ollama instances

Cons

- No chat mode – only auto-completion

- Development has stalled (last commit was in April 2024)

Bottom line: If you need flexible AI model support and don’t mind the lack of chat mode, Llama Coder is a strong contender. However, the pause in development raises long-term concerns.

3. Privy – The Most Flexible Runtime

Privy is another local Copilot alternative that, unlike Llama Coder, includes a chat mode. It also stands out for its broad runtime compatibility: it supports Ollama, llamafile, llama.cpp, and even limited OpenAI integration.

However, like Llama Coder, Privy’s development appears to be inactive, with the last repository update in February 2024.
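To give a feel for what talking to these runtimes looks like, here is a minimal sketch of a chat request. It assumes each runtime exposes an OpenAI-style `/v1/chat/completions` endpoint on its usual default port, which is worth verifying against your own setup. It does not show how Privy itself connects, and the function names and the `DEFAULT_BASE_URLS` table are hypothetical.

```python
import json
import urllib.request

# Assumed default local addresses; verify these against your own installation.
DEFAULT_BASE_URLS = {
    "ollama": "http://localhost:11434",
    "llama.cpp": "http://localhost:8080",
    "llamafile": "http://localhost:8080",
}


def build_chat_request(runtime, model, question):
    """Build (but do not send) a chat request for the chosen local runtime."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,
    }
    return urllib.request.Request(
        DEFAULT_BASE_URLS[runtime] + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(runtime, model, question, timeout=60.0):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(runtime, model, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

With a runtime already serving a model, something like `ask("ollama", "codellama:7b", "Why does this loop never terminate?")` would return the model's answer as a string. The model name here is only an example.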

Pros

- Supports multiple runtime options (Ollama, llamafile, llama.cpp)

- Includes chat mode

Cons

- Development has stalled (no updates since February 2024)

Bottom line: If you need the widest range of runtime options and don’t mind the lack of active development, Privy is worth considering.

4. Tabby – The Enterprise-Ready AI Assistant

Tabby differs from the other options in that it’s designed for team use. While it can run locally, it also supports Docker deployment, allowing teams to run a shared AI coding assistant. Tabby doesn’t use Ollama, instead opting for a custom AI engine.
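Since a shared Tabby server is reached over HTTP, any client on the team's network can request completions from it. The sketch below is an illustration under assumptions, not Tabby's official client: it assumes a `/v1/completions` endpoint that accepts a language plus prefix and suffix segments, bearer-token authentication, and a response containing a `choices` list. Check these against your server's API documentation. The function names are hypothetical.

```python
import json
import urllib.request


def build_completion_request(server_url, token, language, prefix, suffix=""):
    """Build (but do not send) a completion request for a shared server."""
    body = {
        "language": language,
        # Code before and after the cursor position.
        "segments": {"prefix": prefix, "suffix": suffix},
    }
    return urllib.request.Request(
        server_url.rstrip("/") + "/v1/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def first_completion(response_payload):
    """Return the first suggested text from a parsed response, or ''."""
    choices = response_payload.get("choices", [])
    return choices[0]["text"] if choices else ""
```

In practice the editor plugins handle this exchange for you. A sketch like this is mainly useful for smoke-testing a new deployment before rolling it out to the team.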

In terms of editor support, Tabby offers the most comprehensive compatibility, working with VS Code, JetBrains, and even Vim/Neovim. However, unlike the previous options, Tabby is not completely free. It has a free tier for up to 5 users, but additional features require a paid license.

Pros

- Supports multiple editors (VS Code, JetBrains, Vim/Neovim)

- Can be self-hosted or deployed via Docker

- Includes enterprise-grade team collaboration features

Cons

- Not entirely free (only free for up to 5 users)

Bottom line: For organizations looking for an enterprise-ready AI assistant, Tabby is the best option. Its pricing may be a drawback, but the collaboration features and broader editor support justify the cost.

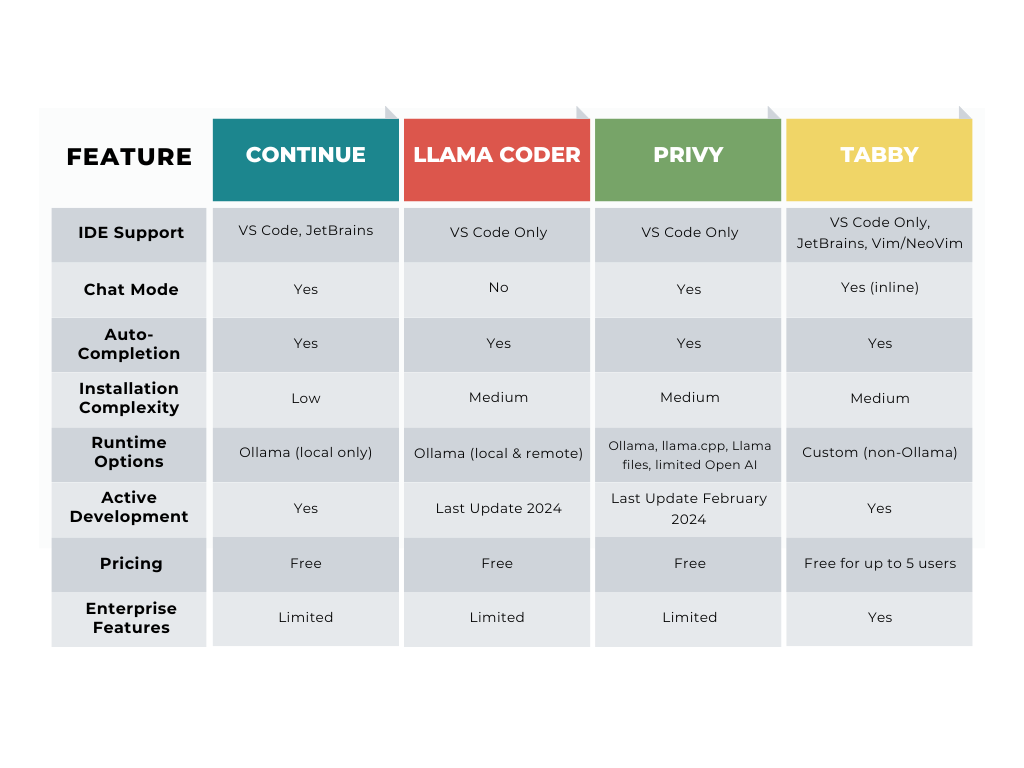

AI Code Assistant Comparison Table

Final Thoughts: Which AI Code Assistant Should You Choose?

Each of these AI coding assistants has unique strengths depending on your needs:

- For the best overall user experience: Continue is the easiest to set up and supports both VS Code and JetBrains.

- For maximum model flexibility: Llama Coder allows remote Ollama instances, though it lacks chat mode.

- For the broadest runtime options: Privy supports multiple runtimes, but its inactive development could be a concern.

- For enterprise-grade solutions: Tabby is the best choice, offering multi-user support and Docker deployment at a cost.

As AI evolves, developers and organizations must balance functionality, security, and long-term sustainability when choosing the right coding assistant. If local AI development is a priority for your team, these four tools provide a strong foundation to enhance productivity without compromising privacy.

Which AI coding assistant are you considering?

Want to explore how AI-powered development can revolutionize your business? Let’s talk. RBA specializes in cutting-edge AI, software engineering, and digital strategy solutions designed to help enterprises build, innovate, and scale with confidence.